What Is Generative AI?

Generative AI is one of the most active frontiers in artificial intelligence. This subset of machine learning learns patterns from existing datasets and uses them to create new content and data. Unlike task-oriented AI systems, generative AI does not merely solve predefined problems; it produces new outputs spanning text, images, and audio.

Key Points

-

Generative AI is a subset of machine learning that creates fresh new content from existing datasets.

-

The difference between conventional AI and generative AI is that AI mimics human intelligence for specific tasks, while generative AI creates diverse outputs by learning from extensive datasets.

-

Examples of generative AI models include: ChatGPT, DALL-E, and DALL-E 2.

-

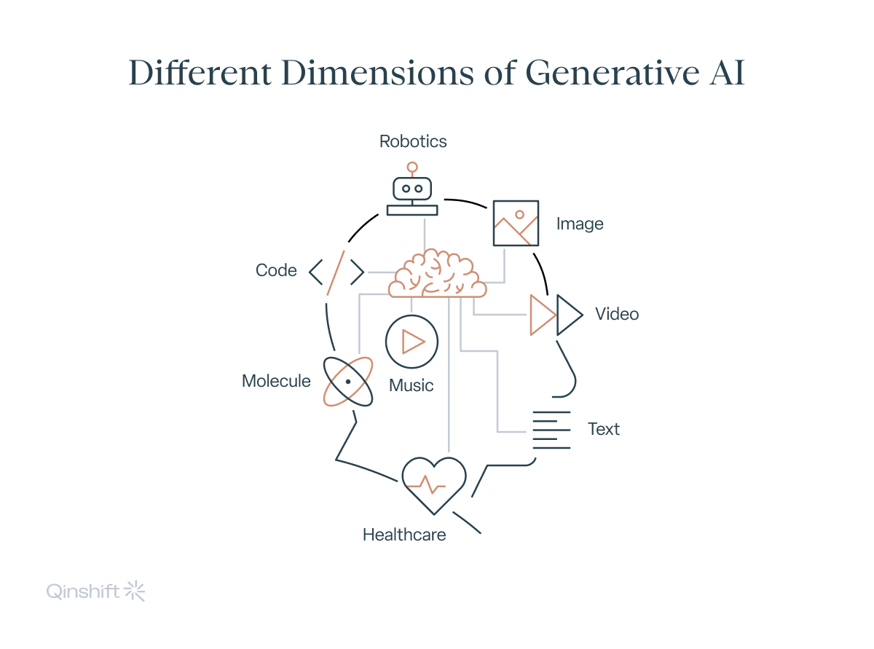

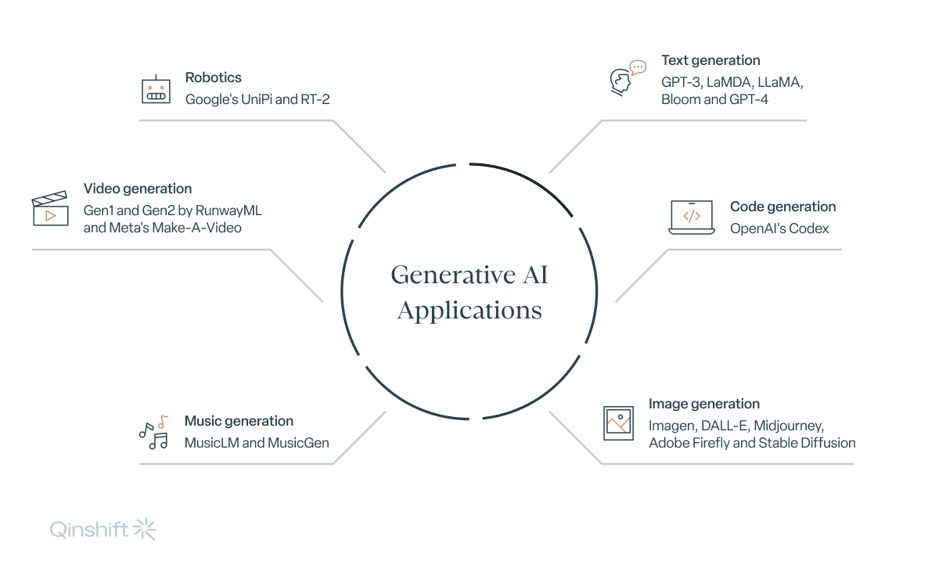

Generative AI can be applied to tasks such as text generation, code generation, image generation, music generation, video generation, healthcare-oriented tasks, molecule generation, and robotics.

-

Google, Mozilla, Meta, and Microsoft are leaders in developing generative AI. Apple invested USD 22.6 billion in generative AI and aims to introduce the Apple GPT chatbot. Meta designed its LlaMa: Large Language Model for AI research, and Google created Performance Max to automate ad targeting.

-

Major tech companies continue to research and develop generative AI (genAI) technologies.

What Is Generative AI?

Generative AI is a subset of machine learning: a family of algorithms and models that create new content or data by learning patterns from existing datasets.

In contrast to conventional AI systems that excel at solving specific tasks, generative AI generates new outputs. These systems digest vast and diverse datasets, “learning” from them to produce results that are statistically probable yet distinct from the original data.

Generative AI works across various domains, including text, images, and audio, where it produces diverse and realistic outputs. This capability comes from compressing and encoding the training data into a model’s internal representation.
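To make "statistically probable yet distinct from the original data" concrete, here is a minimal sketch of a word-level bigram model. It is far simpler than systems like ChatGPT and is not how they work internally: it only counts which word follows which in a tiny corpus, then samples new sequences from those counts. The corpus, the function names `train_bigram_model` and `generate`, and the seed are assumptions made up for this illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Record, for each word, every word that follows it in the training text."""
    model = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for current_word, next_word in zip(words, words[1:]):
            model[current_word].append(next_word)
    return model


def generate(model, start_word, max_words=8, seed=None):
    """Build a new word sequence by repeatedly sampling an observed follower."""
    rng = random.Random(seed)
    words = [start_word]
    # Stop at the length limit or when the last word has no known follower.
    while len(words) < max_words and model.get(words[-1]):
        # Followers seen more often in training are picked more often.
        words.append(rng.choice(model[words[-1]]))
    return " ".join(words)


# Hypothetical toy corpus; real models train on far larger datasets.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]

model = train_bigram_model(corpus)
print(generate(model, "the", seed=0))
```

Every adjacent word pair in the output was seen during training, but the full sequence can be one that never appears in the corpus. That is the sense in which the result is both probable and new.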

The origins of generative AI can be traced back to the domain of statistics, initially employed for analysing numerical data. However, the game-changing turn happened with the rise of deep learning, which enabled generative AI to tackle intricate data types like images and speech.

Among the pioneer models that propelled deep generative modelling was the Variational Autoencoder (VAE), introduced in 2013. VAEs played a pivotal role in enhancing the scalability and accessibility of deep generative models, setting the stage for much of today’s generative AI innovation.

What Is the Difference Between AI and Generative AI?

AI has become a part of our lives, often without us even realising it. We encounter it in voice assistants like Siri and Alexa, customer service chatbots on websites, recommendation systems on streaming platforms, and many other applications.

AI covers various technologies that aim to mimic human intelligence and perform a wide range of tasks. Generative AI is a subfield of AI: it refers to AI that can create new content and outputs.

Artificial Intelligence (AI)

Artificial intelligence is the broad category covering the development of algorithms, systems, and models that enable machines to mimic human intelligence and carry out tasks that typically require human cognitive abilities.

This includes a variety of applications, from simple rule-based systems to sophisticated deep learning models.

Task-oriented AI systems are commonplace nowadays. They are designed to excel at specific tasks or solve specific problems. Customer service chatbots, for instance, are trained to respond to user questions and help users navigate a website.

In many cases, AI systems rely on predefined rules and logic that humans develop. These rules govern the system’s behaviour and decision-making processes, so such systems typically depend more on human-designed rules than on data-driven learning. They may use data for optimisation and performance improvement, but human experts often guide the learning process.
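As a rough illustration of the rule-based approach, the sketch below hard-codes keyword rules for a customer service bot. The rules, the replies, and the `respond` function are all hypothetical; the point is only that every behaviour is written by a person and not learned from data.

```python
# Hypothetical hand-written rules: keyword -> canned reply.
RULES = {
    "refund": "You can request a refund from the Orders page.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "password": "Use the 'Forgot password' link on the sign-in page.",
}
FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def respond(message):
    """Return the reply for the first rule whose keyword appears in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    # No rule matched, so the bot cannot answer.
    return FALLBACK


print(respond("How long does shipping take?"))
print(respond("Tell me a joke"))
```

Any message outside the listed keywords falls through to the fallback reply, which shows how tightly such a system is bound to the rules its designers anticipated.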

Generative AI

As mentioned, generative AI represents a specialised branch within AI. Examples of genAI, such as ChatGPT, DALL-E, and DALL-E 2, can create entirely new content, including text, images, audio, and more, based on patterns and information they have learned from existing datasets.

These models are heavily reliant on data-driven learning. They “learn” patterns and information from extensive datasets, enabling them to generate creative and novel outputs.

ChatGPT is a prime example of generative AI. The “GPT” in its name stands for “generative pretrained transformer”.

ChatGPT is a language model that can generate human-like text based on the input it receives. It doesn’t rely on predefined rules but rather generates responses by drawing from vast amounts of text data it has been trained on. This allows the model to engage in natural language conversations and provide contextually relevant responses.

DALL-E and DALL-E 2 are examples of genAI image creation systems that generate images from textual descriptions. For instance, you can describe a concept or a scene in words, and DALL-E would create an image corresponding to that description.

Generative AI can be applied across different modalities of data. Here are some examples:

- Text generation: systems like GPT-3, LaMDA, LLaMA, Bloom, and GPT-4 can understand, translate, and generate natural language text. Datasets such as BookCorpus and Wikipedia have played a crucial role in training these models.

- Code generation: OpenAI’s Codex is an example of genAI trained on programming language text, thus able to generate source code for new computer programs.

- Image generation: Imagen, DALL-E, Midjourney, Adobe Firefly, and Stable Diffusion produce high-quality visual art from text descriptions.

- Music generation: MusicLM and MusicGen can be trained on audio waveforms and text annotations to create original musical compositions.

- Video generation: Gen-1 and Gen-2 by Runway and Meta’s Make-A-Video are trained on annotated video data and can generate temporally coherent video clips.

- Healthcare: different genAI models can help generate synthetic medical data to develop new drugs and design clinical trials.

- Molecule generation: systems using generative AI can be used in scientific research. Models trained on sequences of amino acids or molecular representations, such as SMILES notation, have had a key role in protein structure prediction and drug discovery. AlphaFold is an example in this category, utilising various biological datasets.

- Robotics: generative AI can also learn from the motions of robotic systems and generate new trajectories for motion planning or navigation. For instance, Google’s UniPi uses natural language prompts to control the movements of a robot arm, making human–robot interaction more intuitive and versatile. Multimodal models like Google’s RT-2 combine visual input and user prompts to perform complex tasks, such as picking up objects from a table covered with various items.

Google’s, Mozilla’s, Meta’s, and Microsoft’s Generative AI Implementations

Tech giants like Google, Mozilla, Meta, and Microsoft are the leaders in implementing generative AI technologies. While Bard, Bing, and Aria have gained recognition as web browser implementations, the tech industry’s focus on genAI goes beyond browsers.

In an interview with Reuters, Apple’s CEO, Tim Cook, emphasised the company’s USD 22.6 billion investment in generative AI research and development. He also unveiled an internal chatbot, nicknamed Apple GPT, which may have uses in AppleCare. Cook has confirmed that Apple has been experimenting with generative AI “for years”.

In February 2023, Meta introduced the Large Language Model Meta AI (LLaMA). LLaMA is designed to help researchers advance their work in the AI field, with smaller models, starting at 7 billion parameters, accessible to those with limited computational resources.

The advertising landscape is also being transformed, with Google’s Performance Max optimising campaign performance across diverse channels. The tool leverages machine learning to automate ad targeting, creative decisions, and placement of marketers’ ad dollars across Google’s advertising ecosystem.
